Pandas To Parquet

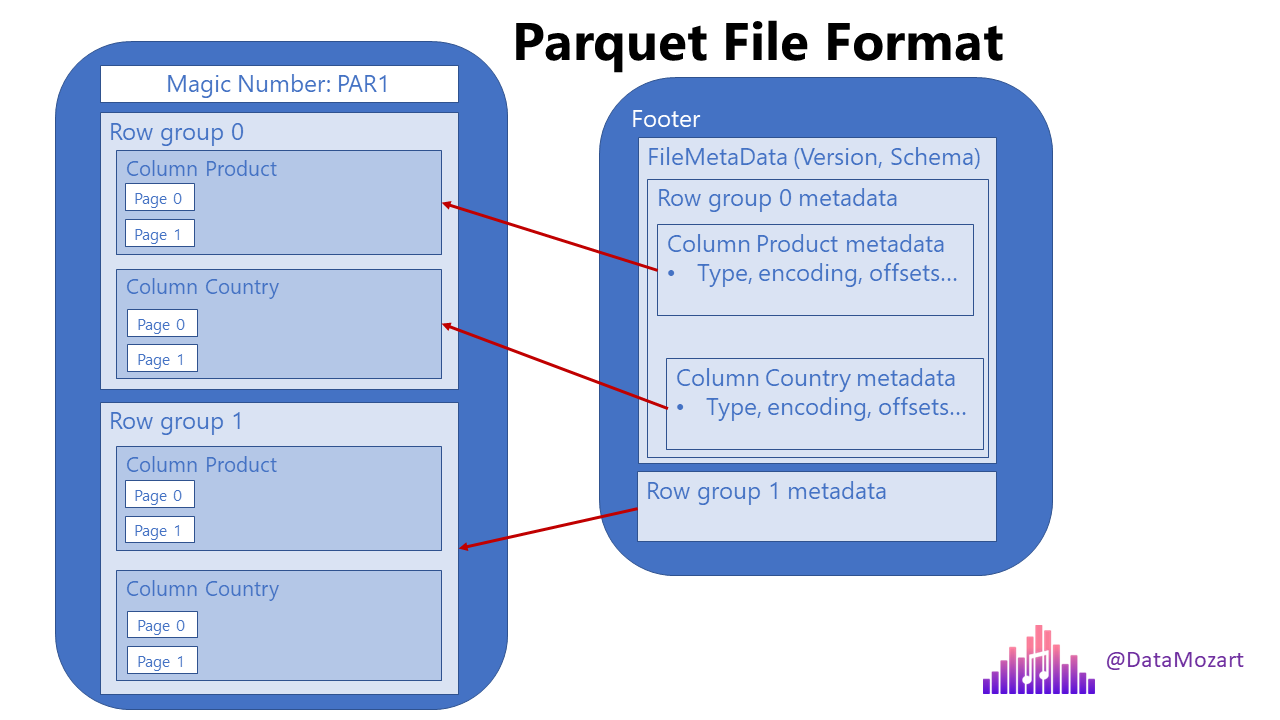

Pandas To Parquet - February 20, 2023. In this tutorial, you'll learn how to use the Pandas `to_parquet` method to write Parquet files in Pandas. While CSV files may be the ubiquitous file format for data analysts, they have limitations as your data size grows. In this article, I will demonstrate how to write data to Parquet files in Python using four different libraries: Pandas, FastParquet, PyArrow, and PySpark. In particular, you will learn how to retrieve data from a database, convert it to a DataFrame, and use each one of these libraries to write records to a Parquet file.

`pandas.DataFrame.to_parquet`: `DataFrame.to_parquet(path, engine='auto', compression='snappy', index=None, partition_cols=None, **kwargs)`. Write a DataFrame to the binary Parquet format. This function writes the DataFrame as a Parquet file. You can choose different Parquet backends, and have the option of compression.

A related question uses the following code: `df_test = pd.DataFrame(np.random.rand(6, 4))`, then `df_test.columns = pd.MultiIndex.from_arrays([('A', 'A', 'B', 'B'), ('c1', 'c2', 'c3', 'c4')], names=['lev_0', 'lev_1'])`, then `df_test.to_parquet("c:/users/some_folder/test.parquet")`. The last line of that code returns: `ValueError: parquet must have string column names`.
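One common workaround for the `ValueError` above is to join each column tuple into a single string before writing. The sketch below rebuilds the `df_test` frame from the question; the helper `flatten_columns` and the `_` separator are hypothetical names chosen for this example, not part of pandas. Whether the error appears at all may depend on the engine and library versions in use.

```python
import numpy as np
import pandas as pd


def flatten_columns(df, sep="_"):
    """Return a copy of df whose column labels are plain strings.

    Hypothetical helper: MultiIndex column tuples are joined with `sep`,
    and any other labels are converted with str().
    """
    out = df.copy()
    if isinstance(out.columns, pd.MultiIndex):
        out.columns = [sep.join(str(level) for level in col) for col in out.columns]
    else:
        out.columns = [str(col) for col in out.columns]
    return out


# Same frame as in the question above.
df_test = pd.DataFrame(np.random.rand(6, 4))
df_test.columns = pd.MultiIndex.from_arrays(
    [("A", "A", "B", "B"), ("c1", "c2", "c3", "c4")], names=["lev_0", "lev_1"]
)

flat = flatten_columns(df_test)
# flat can now be passed to to_parquet, since every column name is a string.
```

Flattening loses the level names (`lev_0`, `lev_1`), so if they matter downstream they need to be stored separately or rebuilt after reading.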

4 Ways To Write Data To Parquet With Python A Comparison

Pyspark FileNotFoundError When Using Pandas To parquet But Path Works

Pandas To Parquet: `DataFrame.to_parquet(path: str, mode: str = 'w', partition_cols: Union[str, List[str], None] = None, compression: Optional[str] = None, index_col: Union[str, List[str], None] = None, **options: Any) → None`. Write the DataFrame out as a Parquet file or directory. (This signature, with its `mode` and `index_col` parameters, is the pandas API on Spark version rather than plain pandas.)

Yes, pandas supports saving a DataFrame in Parquet format. A simple method to write a pandas DataFrame to Parquet, assuming `df` is the pandas DataFrame: import the following libraries, `import pyarrow as pa` and `import pyarrow.parquet as pq`. First, write the DataFrame `df` into a pyarrow table.

Why data scientists should use Parquet files with Pandas (with the help of Apache PyArrow) to make their analytics pipeline faster and more efficient.

GitHub Datahappy1 csv to parquet converter Csv To Parquet And Vice

Pandas Read parquet How To Load A Parquet Object And Return A

How Do I Save Multi-indexed Pandas DataFrames To Parquet

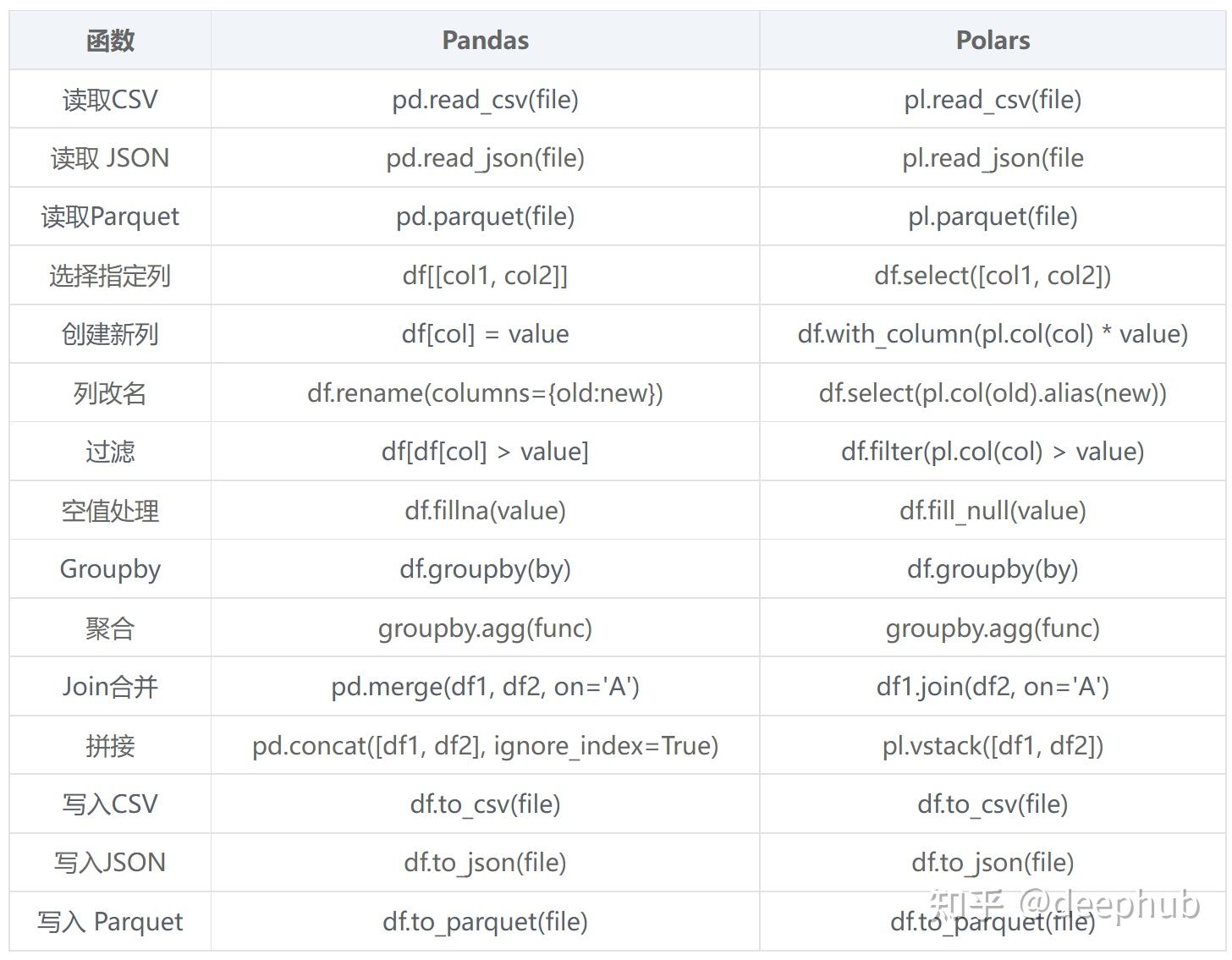

Exporting CSV Files To Parquet With Pandas Polars And DuckDB YouTube

Pandas to parquet file. I am trying to save a pandas object to Parquet with the following code: `LABL = datetime.now().strftime("%Y%m%d_%H%M%S")` followed by `df.to_parquet("/data/TargetData_Raw_{}.parquet".format(LABL))`. It fails with: `ArrowTypeError: ("Expected bytes, got a 'float' object", 'Conversion failed for column Pre-Rumour_Date with type object')`.
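An error like this one typically points to an `object` column that mixes Python types, for example strings and floats, which Arrow cannot map to a single column type. The sketch below shows one possible way to find such columns and cast them to pandas' `string` dtype before writing. The sample values and the helper `mixed_type_columns` are invented for illustration; the original question does not show the actual data, and a date column might be better served by parsing it into real datetimes.

```python
import pandas as pd


def mixed_type_columns(df):
    """Return names of object columns holding more than one Python type.

    Hypothetical helper for diagnosing conversion failures; missing
    values are ignored when collecting the types.
    """
    mixed = []
    for name in df.select_dtypes(include="object").columns:
        kinds = {type(value) for value in df[name].dropna()}
        if len(kinds) > 1:
            mixed.append(name)
    return mixed


# Invented sample: a "date" column that holds a string, a float, and a missing value.
df = pd.DataFrame({"Pre-Rumour_Date": ["2020-01-15", 43845.0, None]})

problem_columns = mixed_type_columns(df)

# Cast each offending column to a single type so it maps to one Arrow type.
clean = df.copy()
for name in problem_columns:
    clean[name] = clean[name].astype("string")
```

After the cast, every non-missing value in the column is a string, so a later `clean.to_parquet(...)` call no longer has to reconcile mixed types in that column.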

Python Parquet Storage BUG Load ORC format Data Failed When Pandas Version 1.2.0.dev0 Issue

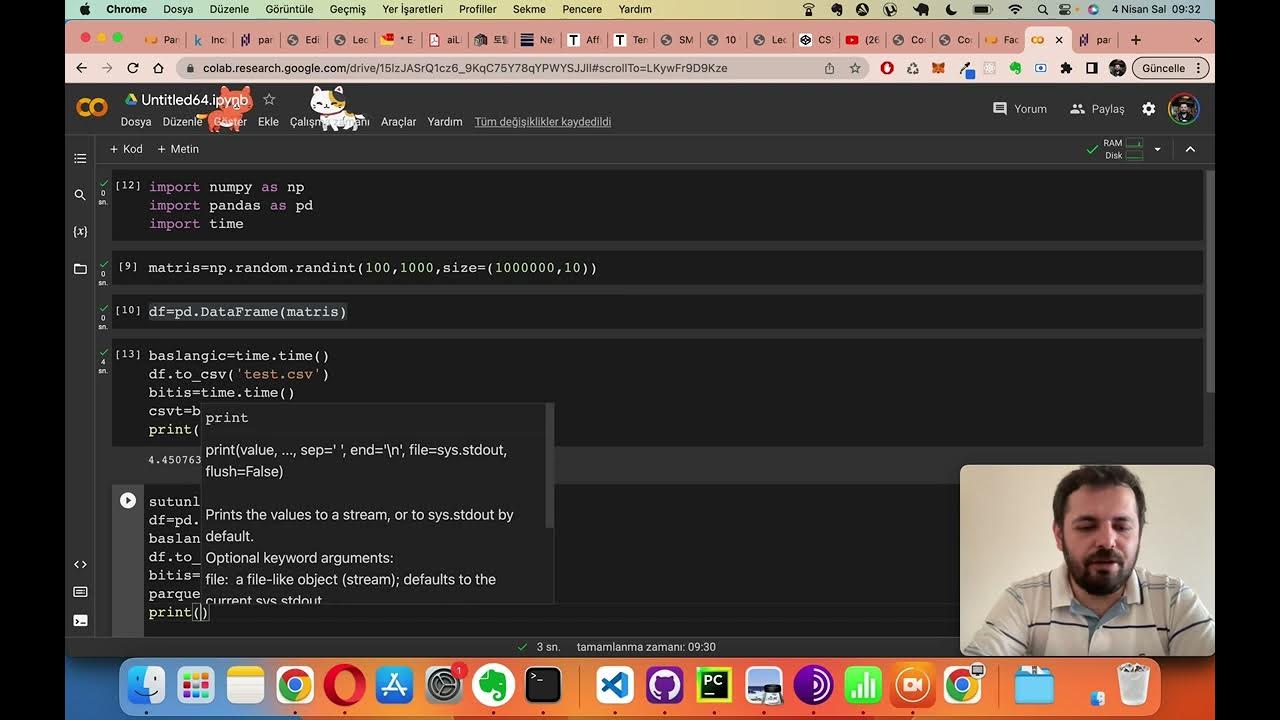

Pandas Parquet to_parquet Method, Speeding Up Pandas YouTube

Convert Parquet To CSV In Python With Pandas Step By Step Tutorial

Peter Hoffmann

Pandas Polars ETL

Write Pandas DataFrame To Parquet File

Pandas To parquet Error ArrowNotImplementedError No Support For

Contributing Temporian

Pandas FutureWarning In to_parquet With Length-1 partition_cols

Streaming Parquet To CSV With Python Polars A Step by Step Guide DevHub