Databricks Get Spark Config

Method 3: Using a third-party tool named DBFS Explorer. DBFS Explorer was created as a quick way to upload and download files to the Databricks File System (DBFS). It works with both AWS and Azure instances of Databricks. You will need to create a bearer token in the web interface in order to connect.

Nov 29, 2019 · Is there any way to write a Spark DataFrame directly to .xls/.xlsx format? Most of the examples on the web are for pandas DataFrames, but I would like to use Spark DataFrames…
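
Apache Spark's built-in writers do not include an Excel format. A common workaround, sketched here as an assumption rather than an official method, is to collect a small DataFrame to the driver with `toPandas()` and let pandas write the file. The sketch assumes pandas and an Excel engine such as openpyxl are installed on the cluster and that the data fits in driver memory. The helper name and paths are hypothetical.

```python
# Hypothetical helper: assumes `sdf` is a pyspark.sql.DataFrame (anything with
# count() and toPandas()) and that pandas + openpyxl are installed on the driver.
EXCEL_MAX_DATA_ROWS = 1_048_576 - 1  # an .xlsx sheet holds 1,048,576 rows; one is the header


def write_spark_df_to_xlsx(sdf, path, sheet_name="Sheet1"):
    """Collect a small Spark DataFrame to the driver and write it as .xlsx via pandas."""
    n_rows = sdf.count()
    if n_rows > EXCEL_MAX_DATA_ROWS:
        raise ValueError(f"{n_rows} rows exceed the Excel sheet limit of {EXCEL_MAX_DATA_ROWS}")
    pdf = sdf.toPandas()  # pulls every row into driver memory
    # `path` is a driver-local file path such as "/tmp/report.xlsx" (hypothetical);
    # copying the file into DBFS afterwards is not shown.
    pdf.to_excel(path, sheet_name=sheet_name, index=False)
    return n_rows
```

For outputs too large to collect, third-party Spark Excel connectors such as spark-excel are an alternative, but they are not covered here.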

Jun 21, 2024 · The decision to use a managed table or an external table depends on your use case and also on the existing setup of your Delta Lake, framework code, and workflows. Based on the explanation you have given, your understanding of managed tables is partially correct. For managed tables, Databricks handles the storage and metadata of the tables, including the …

Aug 22, 2021 · I want to run a notebook in Databricks from another notebook using %run. I also want to be able to send the path of the notebook that I'm running to the main notebook as a parameter. The reason for...
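
`%run` takes a literal notebook path and inlines the target into the caller's context, so it does not accept a path stored in a Python variable. A minimal sketch, assuming the code runs in a Databricks notebook where `dbutils` is predefined, uses `dbutils.notebook.run` instead. It takes the path, a timeout in seconds, and a dictionary of string arguments. The widget name `source_path` and the helper are hypothetical.

```python
# Sketch, assuming a Databricks notebook where `dbutils` is predefined.
# Unlike %run, dbutils.notebook.run(path, timeout_seconds, arguments) accepts a
# path held in a variable and passes string arguments that the child reads
# as widgets. The child runs in its own context, so its variables are not shared.

def run_notebook_with_path(dbutils, child_path, path_to_pass, timeout_seconds=600):
    """Run `child_path`, handing it `path_to_pass` as the (hypothetical) widget 'source_path'."""
    arguments = {"source_path": str(path_to_pass)}  # argument values are passed as strings
    # Returns whatever the child passes to dbutils.notebook.exit(...), as a string.
    return dbutils.notebook.run(child_path, timeout_seconds, arguments)

# In the child notebook (hypothetical widget name):
#   source_path = dbutils.widgets.get("source_path")
#   dbutils.notebook.exit(f"processed {source_path}")
```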

Databricks Writing Spark Dataframe Directly To Excel

Azure Databricks 03: Creating and Configuring Our First Cluster

The data lake is hooked up to Azure Databricks. The requirement is that Azure Databricks be connected to a C# application so that queries can be run and their results retrieved entirely from the C# application. Our current approach is a Databricks workspace containing a number of queries that need to be executed.

Nov 29, 2018 · Databricks is smart and all, but how do you identify the path of your current notebook? The guide on the website does not help. It suggests the Scala call `dbutils.notebook.getContext.notebookPath`, whose REPL output begins `res1`…
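
For Python notebooks, a commonly used counterpart of that Scala call goes through `dbutils.notebook.entry_point`. This is an internal, undocumented API, so treat the sketch below as an assumption that may break across Databricks Runtime versions. It also assumes `dbutils` is predefined, as it is in a notebook.

```python
# Sketch, assuming a Databricks Python notebook where `dbutils` is predefined.
# entry_point is an internal, undocumented bridge to the Scala dbutils object;
# notebookPath() returns a Scala Option, hence the trailing .get().

def current_notebook_path(dbutils):
    """Return the workspace path of the notebook this code runs in."""
    context = dbutils.notebook.entry_point.getDbutils().notebook().getContext()
    return context.notebookPath().get()
```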

Jul 24, 2022 · Is Databricks designed for such use cases, or is it better to copy this table (gold layer) into an operational database such as Azure SQL DB after the transformations are done in PySpark on Databricks? What are the cons of this approach? One would be that the Databricks cluster has to be up and running all the time, i.e. an interactive cluster has to be used.

How To Update Custom Environment Variables For A Databricks Cluster
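
If the gold table is copied to Azure SQL Database, one way to do it from the same PySpark job is Spark's built-in JDBC data source. The sketch assumes the SQL Server JDBC driver is available on the cluster. The server, database, table, and credential names are hypothetical placeholders.

```python
# Sketch: push a curated ("gold") Spark DataFrame to Azure SQL Database over JDBC
# so that applications query SQL rather than a running Databricks cluster.
# All names and credentials passed in are hypothetical placeholders.

def azure_sql_jdbc_url(server, database):
    """Build a SQL Server JDBC URL for an Azure SQL Database logical server."""
    return (f"jdbc:sqlserver://{server}.database.windows.net:1433;"
            f"database={database};encrypt=true")


def export_gold_table(df, server, database, table, user, password, mode="overwrite"):
    """Write `df` (a pyspark.sql.DataFrame) to `table` using Spark's JDBC source."""
    (df.write.format("jdbc")
        .option("url", azure_sql_jdbc_url(server, database))
        .option("dbtable", table)
        .option("user", user)
        .option("password", password)
        .mode(mode)
        .save())
```

With `mode="overwrite"`, Spark drops and recreates the target table by default; setting the JDBC option `truncate` to `"true"` keeps the existing table definition instead. Credentials would normally come from a secret scope via `dbutils.secrets.get` rather than literals.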

Python How To Pass The Script Path To run Magic Command As

Databricks File System DBFS Overview In Azure Databricks Explained In

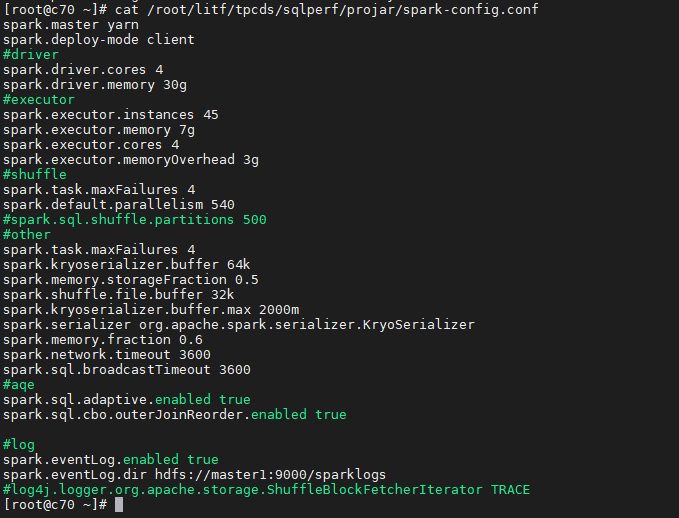

Sep 1, 2021 · How do we access Databricks job parameters inside the attached notebook?
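
Notebook job parameters are usually read through widgets. The following is a minimal sketch, assuming a Databricks notebook task where `dbutils` is predefined. Declaring each widget with a default keeps the notebook runnable interactively, and a job parameter with the same name takes precedence when the notebook runs as a job. The parameter names are hypothetical.

```python
# Sketch, assuming a Databricks notebook task where `dbutils` is predefined.
# Parameter names passed in `defaults` are hypothetical.

def read_job_params(dbutils, defaults):
    """Return {name: value} for each expected parameter, read through widgets."""
    for name, default in defaults.items():
        # Declares a text widget; a job-supplied value, if any, takes precedence.
        dbutils.widgets.text(name, default)
    return {name: dbutils.widgets.get(name) for name in defaults}
```

For example, `read_job_params(dbutils, {"run_date": "1970-01-01", "env": "dev"})` returns the job's `run_date` when the job supplies one and the default otherwise.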

Framework To Create DDL DELTA Tables Using JSON Databricks Tutorial

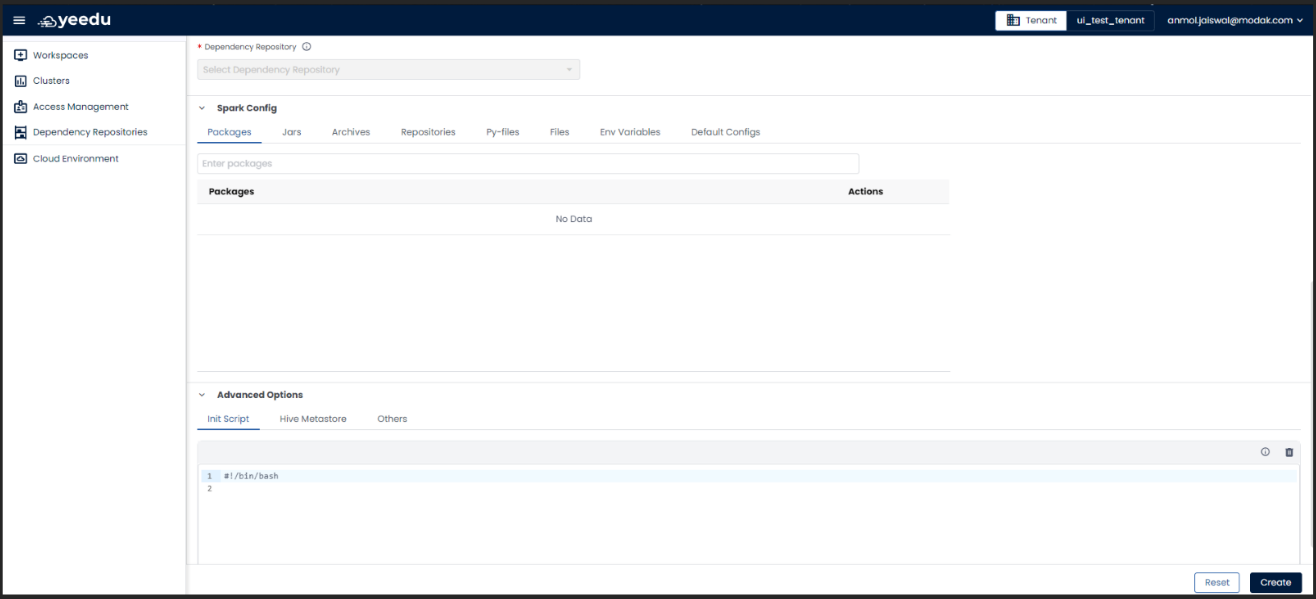

Clusters Yeedu Documentation

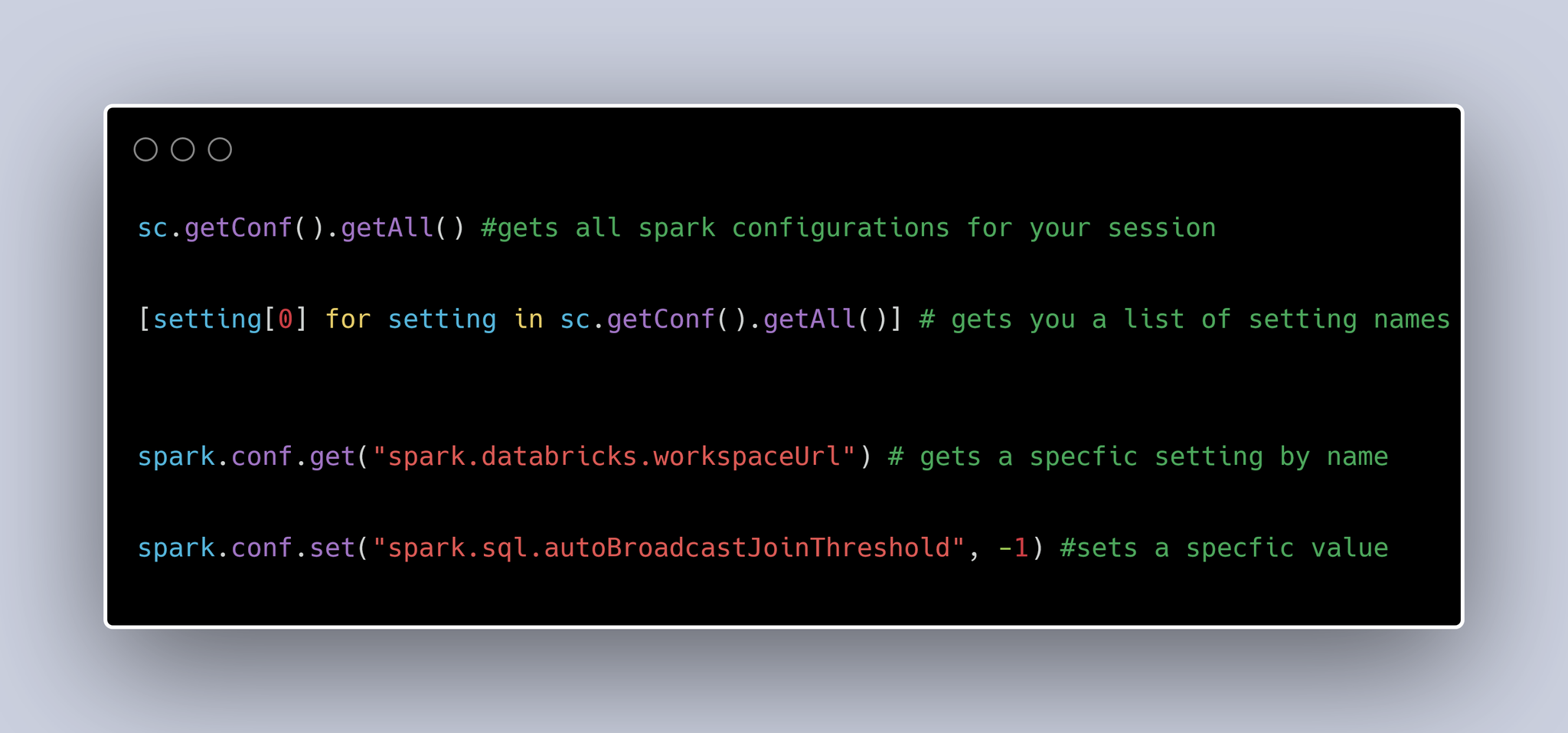

Daily Databricks On Twitter Spark Config Is Responsible For
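
Reading Spark configuration from a notebook can be sketched with two PySpark calls, assuming `spark` is the predefined SparkSession. `spark.conf.get` reads a single runtime setting, and `spark.sparkContext.getConf().getAll()` lists every key/value pair the application started with. On clusters that go through Spark Connect (for example, shared access mode), `sparkContext` may not be available. That limitation is an assumption worth checking on your runtime. The helper names are hypothetical.

```python
# Sketch, assuming a Databricks/PySpark notebook where `spark` (a SparkSession)
# is predefined. Helper names are hypothetical.

def get_spark_setting(spark, key, default=None):
    """Read one runtime setting, falling back to `default` if it is unset."""
    return spark.conf.get(key, default)


def spark_conf_with_prefix(spark, prefix="spark."):
    """Return startup config entries whose keys begin with `prefix`, sorted by key."""
    pairs = spark.sparkContext.getConf().getAll()  # list of (key, value) tuples
    return dict(sorted((k, v) for k, v in pairs if k.startswith(prefix)))
```

For example, `get_spark_setting(spark, "spark.sql.shuffle.partitions")` returns the current value as a string.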

Spark Lineage Ingestion

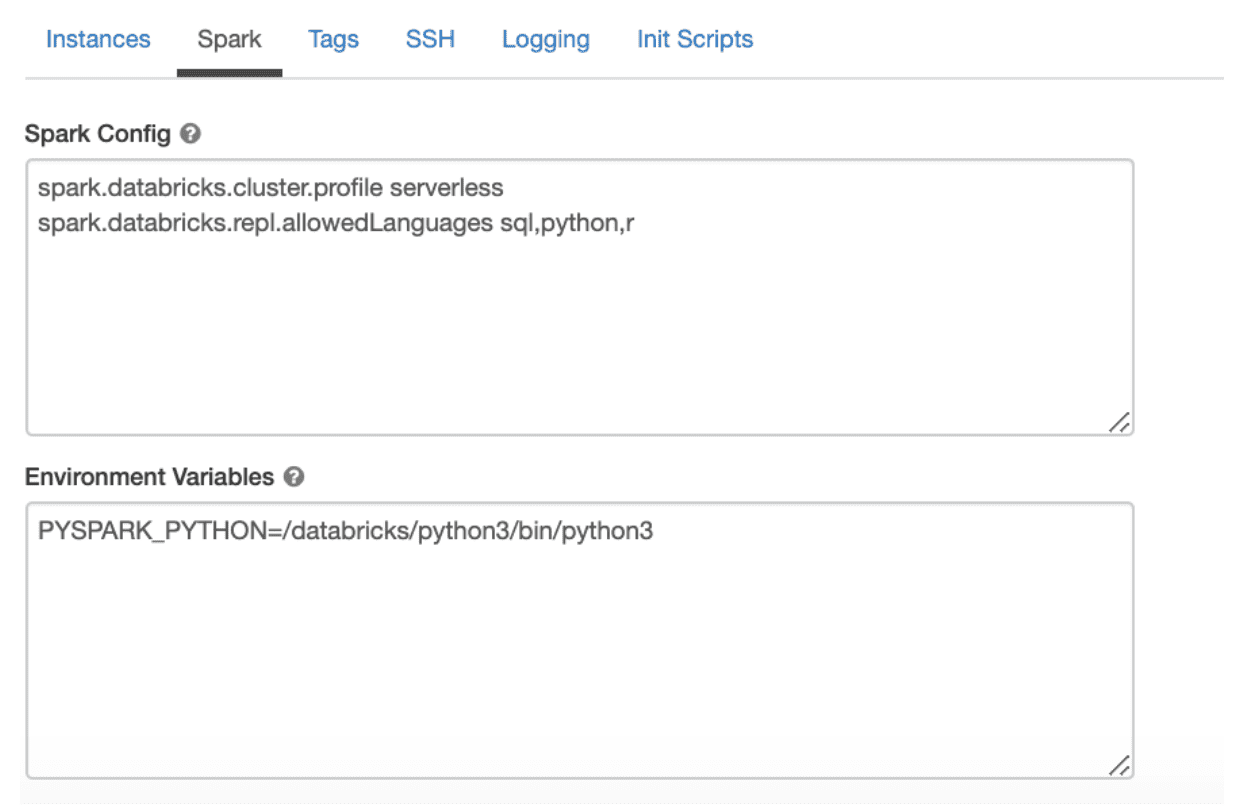

Databricks On AWS Prateek Dubey
